5 Build Applications with LLMs and AI Agents

5.1 Understanding API vs. Chat in AI Tools

Many of you have already used AI chat tools like ChatGPT or Gemini Chat to ask questions, write code snippets, or brainstorm ideas. That’s the user experience — you’re communicating with the model to get answers.

But when you start using LLMs and AI agents for development, the perspective changes completely. Instead of talking to the AI, you’re now building with it. You’re no longer just a user — you become a developer who leverages the model’s power through APIs to create tools, automate workflows, or build intelligent apps for others to use.

The table below compares OpenAI’s ChatGPT and Google’s Gemini platforms, highlighting the difference between their APIs (used by developers to build applications) and their chat interfaces (used by end users to interact with the models).

| Aspect | OpenAI API | ChatGPT (OpenAI) | Gemini API | Gemini Chat |
|---|---|---|---|---|
| Type | Developer API | Chat Interface | Developer API | Chat Interface |
| Purpose | Build apps or tools using GPT models (e.g., GPT-4). | Chat directly with GPT models for writing, coding, or learning. | Build apps or workflows using Gemini models. | Chat naturally with Gemini for assistance or ideas. |
| How It’s Used | Through code (Python, JS, etc.). | Through a chat interface (web or app). | Through code (Google AI Studio or Vertex AI). | Through chat (Gemini web app, Workspace integration). |
| Users | Developers, researchers. | General users, educators, professionals. | Developers, data teams. | Students, creators, general users. |
| Example Use | A developer calls GPT-4 via API to generate summaries or analyze data. | You ask “Explain this regression code.” | A data app uses Gemini API to summarize Google Sheets data. | You ask Gemini, “Visualize this dataset for me.” |
| Output Control | Fully customizable; responses formatted via code. | Natural text output in chat. | Fully customizable; can return text, JSON, or structured data. | Conversational responses, plain text or visuals. |
| Goal | Build with the model. | Talk to the model. | Build with the model. | Talk to the model. |

- APIs are for developers — you use them to build apps with AI features for the end users.

- Chat tools are for the end users — you chat with the model to get answers.

- OpenAI powers ChatGPT and Google powers Gemini; they are separate ecosystems with separate APIs and keys.

5.2 Lab: Enhance Your App with LLM-Powered Interpretation

In this lab, you will continue building your financial dashboard by integrating AI features with the Google Gemini API.

5.2.1 Get Started with Gemini API

Please sign in to Google AI Studio using your Google account: https://ai.google.dev/aistudio

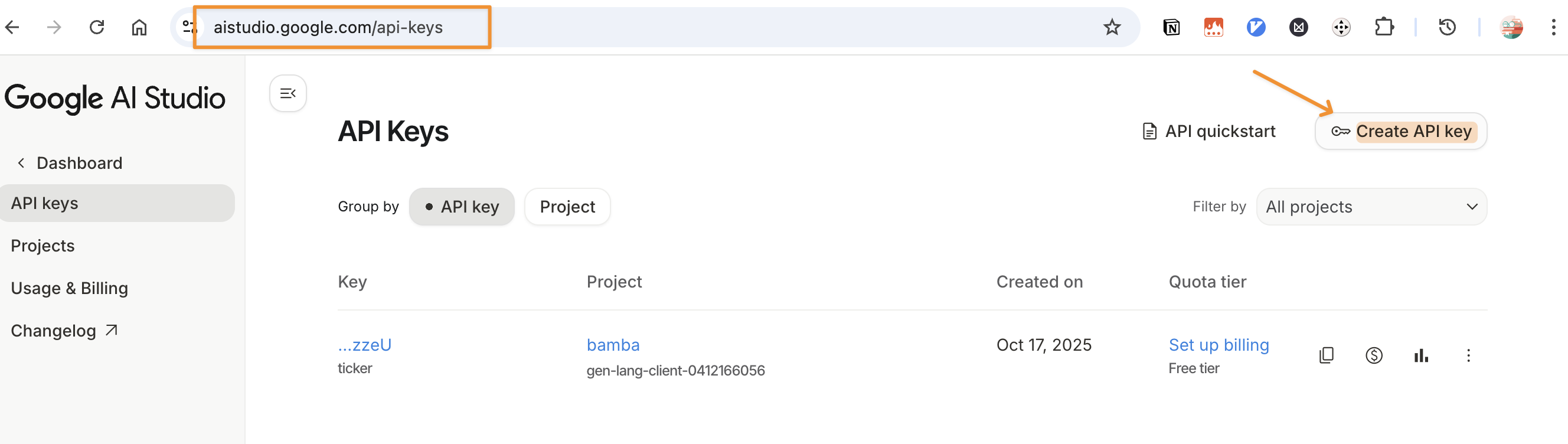

5.2.2 Create your Google Gemini API Key

Google has a free tier for the Gemini API that provides limited usage for development and experimentation.

You can obtain a free API key using your Google account.

Delete your key when it is no longer in use. Keep it secure, and never share or publish it.
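One common way to keep a key out of notebooks and source files is to read it from an environment variable. The sketch below assumes the variable is named `GEMINI_API_KEY`; that name and the helpers `load_api_key` and `mask` are illustrative choices, not something this lab prescribes.

```python
import os


def load_api_key(var_name: str = "GEMINI_API_KEY", env=None) -> str:
    """Return the API key stored in an environment variable.

    var_name is an assumed name; use whatever name you exported the key under.
    """
    env = os.environ if env is None else env
    key = (env.get(var_name) or "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set. Export it before running, "
            "and keep the key out of your code and version control."
        )
    return key


def mask(key: str) -> str:
    """Show only the last 4 characters so the key can appear in logs."""
    return "*" * max(len(key) - 4, 0) + key[-4:]
```

With the variable set, the client can be created as `genai.Client(api_key=load_api_key())` instead of pasting the key into the code.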

5.2.3 Lab: Run A Simple Gemini Use Case

Check out the page <https://aistudio.google.com/app/> for a simple use case of the Gemini API. You can easily create a Colab notebook and test the sample code there; since both Gemini and Colab are Google products, they integrate seamlessly for quick experimentation.

You only need to modify the following line with your API key to run the sample code.

    client = genai.Client(api_key="your_actual_api_key_here")

5.2.4 Prompt Engineering

A prompt is the input text or query given to a generative AI model to instruct it on what to do.

e.g., “Write a Python function to calculate the average of a list.”

In simple terms, prompt engineering is about communicating effectively with AI. It’s the skill of writing prompts so that a large language model (LLM) understands your intent, context, and background, and can respond accurately and usefully.

In other words:

Prompt engineering = communication skills with LLMs.

You can explore prompt ideas for the Gemini API in Google AI Studio: https://ai.google.dev/gemini-api/prompts

5.2.5 Bad vs Good Prompts

Here are several examples showing how vague prompts differ from well-engineered ones. An AI model can understand and act only as well as you communicate.

| Task | Bad Prompt | Good Prompt | Why It’s Better |
|---|---|---|---|
| Ask for code | “Write some Python code for data.” | “Write a Python function using pandas to read a CSV file, clean missing values, and calculate the average of each column.” | Gives clear context, specific goal, and tools to use. |
| Explain concept | “Explain machine learning.” | “Explain what machine learning is for a high school student using everyday examples.” | Specifies audience and tone, making the response more focused. |
| Generate text | “Write about climate change.” | “Write a 3-paragraph summary of climate change causes and impacts, suitable for a classroom presentation.” | Defines length, scope, and audience. |
| Data science task | “Analyze this dataset.” | “Analyze this dataset to find the top 5 products by sales, and visualize the trend over time using matplotlib.” | Specifies the goal, method, and output format. |
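The ingredients that separate the good prompts from the bad ones (a specific task, an audience, constraints, and an output format) can also be assembled in code. The helper below is a minimal sketch; `build_prompt` and its parameter names are hypothetical and not part of any library.

```python
def build_prompt(task: str, audience: str = "", constraints=(), output_format: str = "") -> str:
    """Assemble a prompt from a task plus optional audience, constraints, and format."""
    parts = [task.strip()]
    if audience:
        parts.append(f"Audience: {audience}")
    if constraints:
        parts.append("Constraints:\n" + "\n".join(f"- {c}" for c in constraints))
    if output_format:
        parts.append(f"Output format: {output_format}")
    return "\n\n".join(parts)


prompt = build_prompt(
    task="Analyze this dataset to find the top 5 products by sales.",
    audience="a business analyst who knows pandas",
    constraints=["Use matplotlib for the trend chart", "Explain each step briefly"],
    output_format="Python code followed by a short summary",
)
print(prompt)
```

Leaving every optional field empty reproduces a bare, vague prompt, which makes it easy to compare the model's answers with and without the extra context.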

5.2.6 Lab: Design a Value-Adding AI Prompt for Your Dashboard

Your financial metrics dashboard already allows users to select tickers and view several metrics. Now, your goal is to design a prompt that integrates an LLM from Gemini to provide AI-generated insights that add value for the users of the dashboard.
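As a starting point, one possible design is to embed the dashboard's own numbers in the prompt and restrict the model to them. The sketch below is only an illustration: the function name `build_insight_prompt`, the metric names, and the values are all made up, so substitute whatever your dashboard actually computes.

```python
import json


def build_insight_prompt(ticker: str, metrics: dict) -> str:
    """Build a prompt that grounds the model in the dashboard's own numbers."""
    return (
        "You are a financial analyst assistant inside a metrics dashboard.\n"
        f"Using ONLY the metrics below for {ticker}, write:\n"
        "- 2 short observations about valuation or profitability\n"
        "- 1 risk a retail investor should look into further\n"
        "Do not invent numbers, and do not give buy or sell advice.\n\n"
        f"Metrics (JSON):\n{json.dumps(metrics, indent=2)}"
    )


# Hypothetical values for illustration only.
example_metrics = {"pe_ratio": 28.4, "profit_margin": 0.25, "debt_to_equity": 1.5}
prompt = build_insight_prompt("AAPL", example_metrics)
print(prompt)
```

The resulting string can then be sent to Gemini as the prompt text. Passing the metrics as JSON and asking for a fixed number of bullets keeps the output short enough to display next to the charts.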

5.3 Lab: Add an AI Agent to your Dashboard (optional)

5.3.1 What Is an AI Agent?

An AI Agent is a system that uses an AI model—often a Large Language Model (LLM)—to reason, decide, and take actions toward a goal. Instead of just producing text, an agent can plan what to do next, call external tools (like APIs or databases), and use the results to continue reasoning. In other words, it’s not just “answering questions,” it’s doing things.

Think of an AI agent as an intelligent assistant that:

- Understands your goal (through natural language),

- Decides what steps to take,

- Executes tools or code to gather information or perform tasks,

- Reflects on results, and produces a final answer or output.
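The four steps above can be sketched as a small loop. To keep the example runnable without an API key, the decision step here is a hard-coded stand-in for the LLM, and the tool returns made-up prices; all names (`lookup_price`, `decide`, `run_agent`) are hypothetical.

```python
from typing import Callable, Dict, List


def lookup_price(ticker: str) -> str:
    """Toy tool with hard-coded data, standing in for a real API call."""
    prices = {"AAPL": 190.0, "MSFT": 410.0}  # made-up numbers for illustration
    if ticker in prices:
        return f"{ticker} last price: {prices[ticker]}"
    return f"No data for {ticker}"


TOOLS: Dict[str, Callable[[str], str]] = {"lookup_price": lookup_price}


def decide(goal: str, observations: List[str]) -> dict:
    """Stand-in for the LLM's decision step (a real agent would ask the model)."""
    if not observations:  # nothing gathered yet, so call a tool first
        ticker = goal.split()[-1].upper()
        return {"action": "lookup_price", "arg": ticker}
    return {"action": "finish", "arg": observations[-1]}


def run_agent(goal: str, max_steps: int = 3) -> str:
    observations: List[str] = []
    for _ in range(max_steps):
        step = decide(goal, observations)            # decide what to do next
        if step["action"] == "finish":               # enough information gathered
            return f"Answer: {step['arg']}"
        result = TOOLS[step["action"]](step["arg"])  # execute the chosen tool
        observations.append(result)                  # feed the result back in
    return "Stopped: step limit reached"


print(run_agent("What is the price of AAPL"))
```

The step limit is a deliberate design choice: it guarantees the loop ends even if the decision step never chooses to finish.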

5.3.2 Difference between LLM and AI Agent

A Large Language Model (like Gemini, GPT, or Claude) is a text reasoning engine. It’s trained to predict and generate text based on patterns in language.

An LLM by itself doesn’t have access to the outside world. For example, if you ask it about today’s news, it may not know the answer because its knowledge stops at a fixed cutoff date. Here are the reported knowledge cutoff dates of some popular LLMs:

- GPT‑4 Turbo (by OpenAI): Knowledge cutoff around April 2023 for the first preview release; the original GPT‑4 stopped around September 2021.

- GPT‑4o (OpenAI): Reported knowledge cutoff around October 2023.

- Gemini 2.0 Flash (by Google DeepMind): Knowledge cutoff reported around August 2024.

- Claude 3.5 Sonnet (by Anthropic): Knowledge cutoff around April 2024.

| Concept | What It Does | Example |
|---|---|---|
| LLM | Generates and understands text | Answer “What is AI?” |
| AI Agent | Uses the LLM’s reasoning plus tools or actions to achieve goals | Fetch news for AAPL, analyze it, summarize findings |

An LLM on its own can’t fetch news, query APIs, or control apps; it only works with the text it’s given. That’s where agents come in. Many modern LLMs are becoming agentic: when connected to external tools, they can not only generate text but also decide when and how to use those tools. Such models can fetch real-time information, call APIs, write and run code, and plan multi-step reasoning tasks.

In this mini exercise, you’ll see how a mini AI agent can make a simple decision before taking action. The agent uses an LLM to reason about the company’s sector: if the selected ticker belongs to an IT or technology company, the model decides to fetch the latest news using yfinance. Otherwise, it decides to do nothing and responds that no action is needed. This simple check, decide, then act (or not) logic illustrates how agents combine reasoning and tool use to behave intelligently, even in small, rule-based workflows.

    # mini_agent_two_actions.py
    # pip install yfinance google-genai
    # You may test-run it in Colab first,
    # then revise it and consider embedding it in your Streamlit app.

    import json

    import yfinance as yf
    from google import genai

    client = genai.Client(api_key="your--google--api")  # replace with your own key
    MODEL = "gemini-2.0-flash"


    def fetch_news(ticker: str, limit: int = 5):
        """Tool: get the latest news for a ticker using yfinance."""
        try:
            news = yf.Ticker(ticker).news or []
        except Exception:
            news = []
        items = []
        for n in news[:limit]:
            content = n.get("content") or {}
            link = content.get("clickThroughUrl")
            # The link may arrive as a dict holding a "url" key rather than
            # a plain string, so unwrap it if needed.
            if isinstance(link, dict):
                link = link.get("url")
            # Keep only entries that have both a title and a link
            if content.get("title") and link:
                items.append({"title": content["title"], "link": link})
        print(items)  # debug output
        return items


    def decide_action(ticker: str):
        """Ask the model to choose between fetching news and doing nothing."""
        prompt = f"""
    You can take ONE of two actions for a given stock ticker.
    1. fetch_news: if the ticker belongs to an IT or technology company
       (e.g., AAPL, MSFT, GOOG, META, NVDA, AMZN, etc.)
    2. none: for any other sector or if you're unsure.

    Return STRICT JSON only:
    {{"action": "fetch_news" | "none", "args": {{"ticker": "<T>", "limit": <int>}}}}
    Use ticker="{ticker}".
    """
        resp = client.models.generate_content(
            model=MODEL,
            contents=prompt,
            config={"response_mime_type": "application/json"},
        )
        try:
            return json.loads(resp.text)
        except (json.JSONDecodeError, TypeError):
            # Fall back to the safe action if the reply is not valid JSON
            return {"action": "none", "args": {}}


    def final_answer(ticker: str, action: str, items=None):
        """Produce the user-facing answer for the chosen action."""
        if action == "none":
            return f"The ticker **{ticker}** is not in the IT sector, so no action was taken."
        if not items:
            # Avoid asking the model to summarize an empty list
            return f"No recent news was found for **{ticker}**."
        prompt = f"""
    Summarize the latest news for {ticker} using ONLY this JSON list:
    {json.dumps(items, indent=2)}

    Return short markdown:
    - 2-3 key insights
    - One-line sentiment (Positive/Neutral/Negative)
    - Sources as bullet links: [Title](URL)
    """
        resp = client.models.generate_content(
            model=MODEL,
            contents=prompt,
            config={"response_mime_type": "text/plain"},
        )
        return resp.text


    def run(ticker="AAPL"):
        plan = decide_action(ticker)
        action = plan.get("action", "none")
        args = plan.get("args") or {}
        if action == "fetch_news":
            items = fetch_news(args.get("ticker", ticker), int(args.get("limit") or 5))
            answer = final_answer(ticker, action, items)
        else:
            # Treat any unexpected action as "none"
            answer = final_answer(ticker, "none")
        print(answer)


    if __name__ == "__main__":
        # run("AAPL")  # should fetch news
        run("TLT")  # try a non-IT ticker for the "none" action
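Because the agent acts on whatever JSON the model returns, it can help to validate that reply before calling any tool. The following is a minimal sketch of such a check. `parse_plan`, `ALLOWED_ACTIONS`, and the `max_limit` cap are hypothetical additions, not part of the script above or of any library.

```python
import json

ALLOWED_ACTIONS = {"fetch_news", "none"}


def parse_plan(raw_text: str, default_ticker: str, max_limit: int = 10) -> dict:
    """Turn the model's raw reply into a safe plan, falling back to 'none'."""
    fallback = {"action": "none", "args": {"ticker": default_ticker, "limit": 0}}
    try:
        plan = json.loads(raw_text)
    except (json.JSONDecodeError, TypeError):
        return fallback  # reply was not valid JSON
    if not isinstance(plan, dict) or plan.get("action") not in ALLOWED_ACTIONS:
        return fallback  # unknown or missing action
    args = plan.get("args") if isinstance(plan.get("args"), dict) else {}
    try:
        limit = int(args.get("limit", 5))
    except (TypeError, ValueError):
        limit = 5
    return {
        "action": plan["action"],
        "args": {
            "ticker": str(args.get("ticker") or default_ticker).upper(),
            "limit": max(1, min(limit, max_limit)),  # clamp to a sane range
        },
    }


print(parse_plan('{"action": "fetch_news", "args": {"ticker": "aapl", "limit": 50}}', "AAPL"))
print(parse_plan("not json at all", "TLT"))
```

Defaulting to "none" whenever the reply is malformed means a bad model response results in no action rather than an unintended one.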